Let me say this upfront: a human is writing this email. These days, it can be hard to tell. Robot dogs, a flirty, artificial voice in your ear, a fully automated car like KITT in Knight Rider: it all went from fiction to real life very fast.

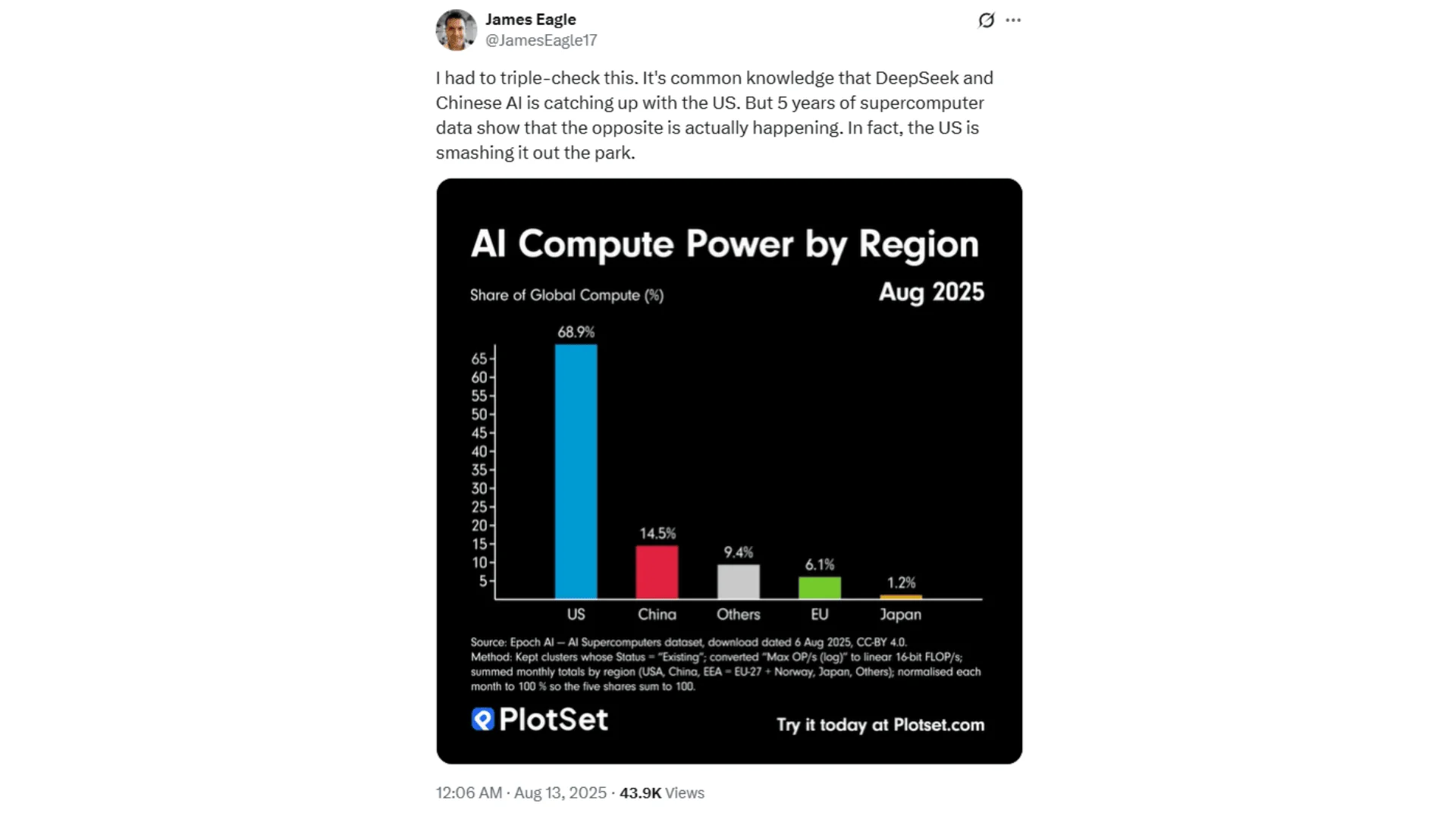

We have quickly moved from being in awe of these technologies to worrying about China's ability to dominate AI. But nearly three years after the US sparked the AI boom, the rest of the world is still playing catch-up. For now, the AI story is very much an American story.

The US currently controls 69% of the world’s AI computing power, giving it a dominant edge. For now, the real race is not between the US and China, but between US companies.

Different AI companies dominate the consumer and enterprise markets

ChatGPT became a household name faster than any app before it. As of June 2025, it held nearly 80% of the worldwide chatbot market, with over 5.7 million monthly active users.

People use it for all kinds of things. Some have replaced Googling with asking ChatGPT (although it’s not entirely reliable). Others, like digital marketer Meg Faibisch Kühn, have offloaded most of their daily tasks to the chatbot: Kühn says she uses it to plan her schedule, write work emails and content, suggest recipes, and plan her garden (in these cases, ChatGPT sounds grossly underpaid for the work it does).

Perplexity is a distant second, with an 11% market share. Microsoft Copilot holds 4.9% and Google Gemini 2.2%, followed by Anthropic’s Claude (1.1%) and DeepSeek (1%).

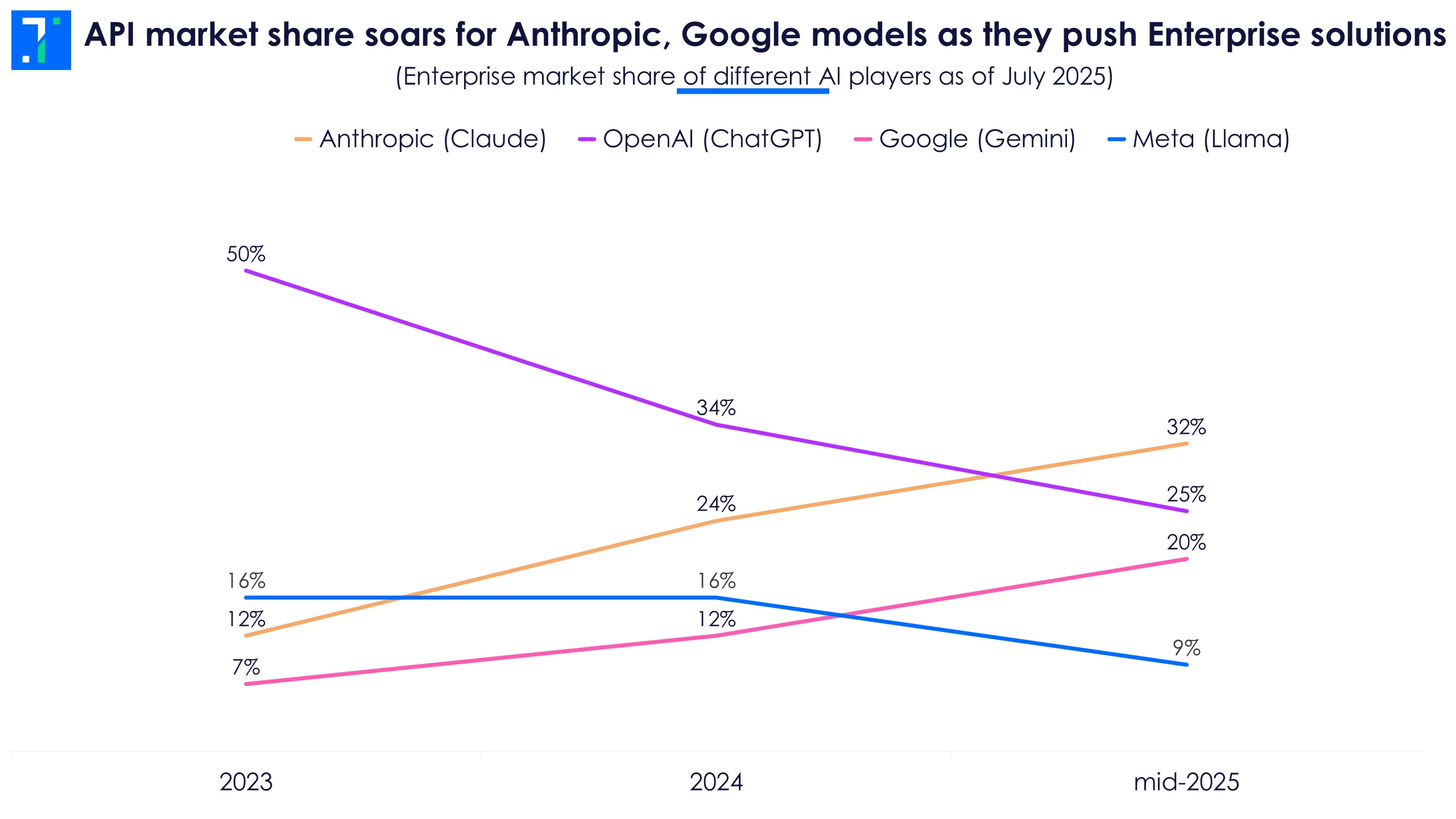

While ChatGPT dominates among everyday users, the enterprise AI scene is a different story. OpenAI held 50% of the enterprise LLM market at the end of 2023, but its share has since halved to 25%. Anthropic now leads with 32%, while Google is at 20%.

API market share soars for Anthropic, Google models as they push enterprise solutions

Anthropic’s rise began with Claude 3.5 Sonnet in mid-2024 and accelerated with Claude 3.7 Sonnet, the first agent-first LLM. By mid-2025, the newer Claude Sonnet 4 model and the Claude Code coding tool had made it the go-to choice for developers. Its biggest win? Code generation, where Claude now holds 42% of the market, double OpenAI’s share. Google’s Gemini is catching up fast to Claude and OpenAI’s GPT models.

Meta’s Llama lags with 9%, and DeepSeek, despite its splashy debut earlier this year, holds just 1%.

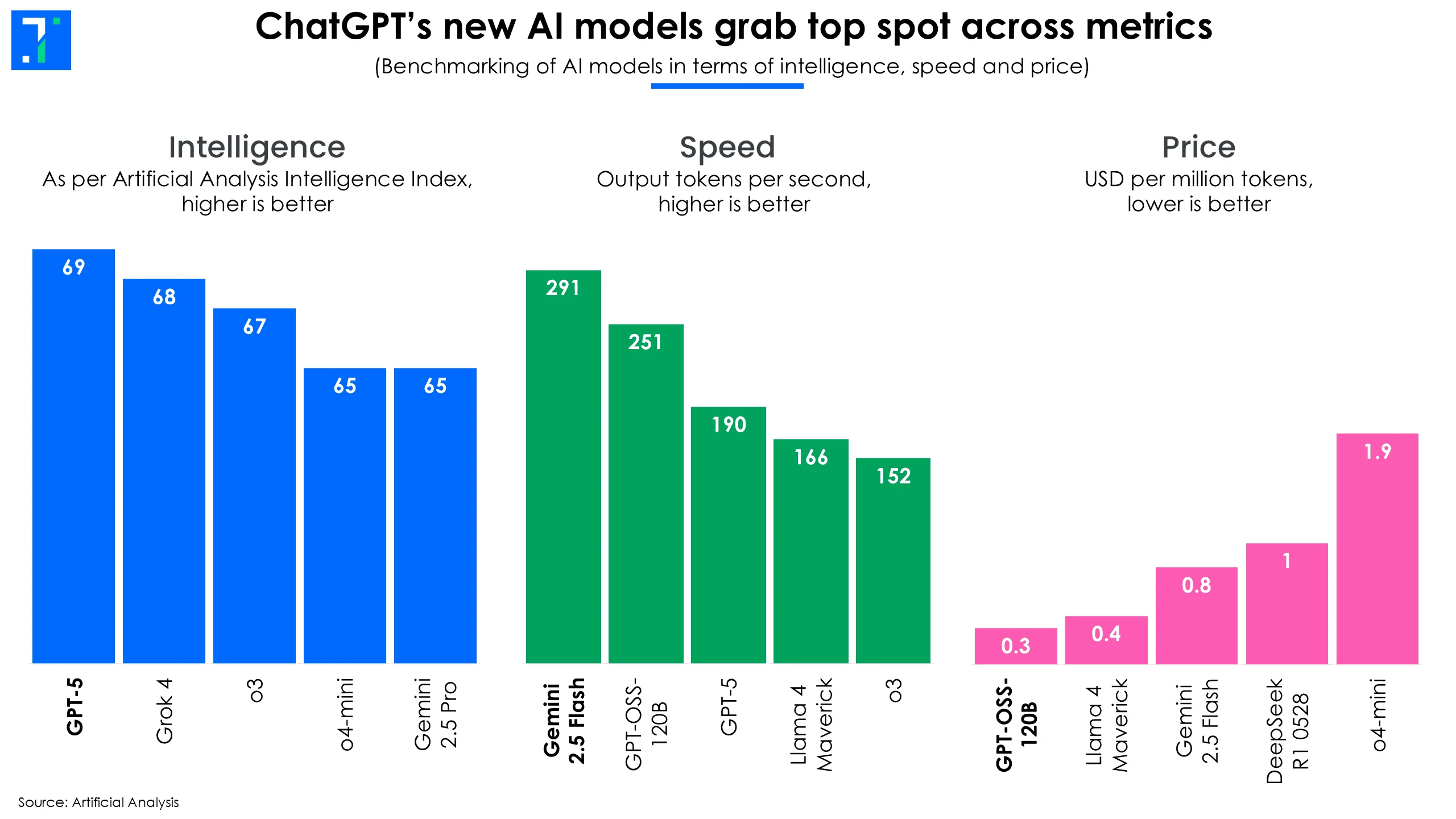

Tug of war: AI models try to balance intelligence, speed and price

Every AI company is playing a tricky, three-way tug-of-war: make the smartest model, make it lightning fast, and make it cheap enough that customers don’t faint when they see the bill.

OpenAI’s latest model, GPT-5, released on August 7, quickly took the top spot on the Artificial Analysis Intelligence Index. OpenAI CEO Sam Altman says the model is “significantly better” than its predecessors. In a briefing ahead of the launch, Altman said, “GPT-5 is the first time that it really feels like talking to an expert in any topic.” The actual rollout, however, got mixed reviews from users.

ChatGPT’s new AI models grab top spot across metrics

But intelligence isn’t everything (any nerd trying to snag a prom date can confirm this). Speed is where Google’s Gemini 2.5 Flash steals the show, clocking in as the fastest major model right now.

OpenAI’s surprise runner-up in this category is its new open-source model, which keeps up on many benchmarks despite being much cheaper to run.

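Then there is the cost game. OpenAI’s open-source release has about 120 billion parameters but activates only about 5 billion for each token. It uses a mixture-of-experts design, in which a router sends each token to a small subset of specialised “expert” sub-networks. Because most of the network sits idle for any given token, you pay less for answers of similar quality, which makes it a hit with developers watching their API bills.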
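To make the routing idea concrete, here is a toy Python sketch of top-k mixture-of-experts routing. It is not OpenAI’s implementation. The sizes (8 experts, 2 active per token, 4-dimensional vectors) and the names `moe_forward`, `router` and `active_fraction` are hypothetical, chosen only to show how a router scores every expert but runs just the top few.

```python
import math
import random

# Toy mixture-of-experts (MoE) layer with top-k routing.
# All sizes below are illustrative assumptions, not the real model's configuration.
NUM_EXPERTS = 8  # hypothetical number of expert sub-networks
TOP_K = 2        # hypothetical number of experts run per token
DIM = 4          # hypothetical hidden size of each token vector

rng = random.Random(0)  # fixed seed so the toy weights are reproducible


def random_matrix(rows, cols):
    return [[rng.uniform(-1, 1) for _ in range(cols)] for _ in range(rows)]


def matvec(m, v):
    """Multiply matrix m (a list of rows) by vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def softmax(xs):
    """Numerically stable softmax."""
    top = max(xs)
    exps = [math.exp(x - top) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


# Each expert is a DIM x DIM linear map; the router scores every expert for a token.
experts = [random_matrix(DIM, DIM) for _ in range(NUM_EXPERTS)]
router = random_matrix(NUM_EXPERTS, DIM)


def moe_forward(token):
    """Send one token to its TOP_K highest-scoring experts and mix their outputs."""
    scores = matvec(router, token)  # one score per expert
    chosen = sorted(range(NUM_EXPERTS), key=lambda i: scores[i], reverse=True)[:TOP_K]
    weights = softmax([scores[i] for i in chosen])  # renormalise over the chosen experts only
    out = [0.0] * DIM
    for w, i in zip(weights, chosen):
        y = matvec(experts[i], token)  # only the chosen experts do any computation
        out = [o + w * yi for o, yi in zip(out, y)]
    return out, chosen


def active_fraction(active_params, total_params):
    """Share of parameters used per token."""
    return active_params / total_params


token = [0.5, -1.0, 0.25, 2.0]
output, used = moe_forward(token)
print("experts used for this token:", used)
print(f"toy layer: {TOP_K} of {NUM_EXPERTS} experts run per token")
print(f"article's figures: {active_fraction(5, 120):.1%} of parameters active per token")  # 4.2%
```

In a real model, the attention layers and other shared weights run for every token as well, so an active-parameter count covers more than just the selected experts.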

AI firms raise billions to train new models and are still losing money

Building the smartest AI isn’t just about algorithms and talent — it’s also about having a bank account big enough to run a small country. Analysts estimate the largest training runs could exceed a billion dollars in cost by 2027.

OpenAI recently raised over $8 billion as part of its $40 billion fundraise planned for this year, at a valuation of $300 billion. The money will fund the company’s expansion plans and massive compute bills. In 2024, OpenAI reported a loss of around $5 billion on $3.7 billion in revenue. For 2025, it is bracing for an $8 billion cash burn.

Currently, most AI firms, OpenAI included, are burning more than one dollar to generate every dollar of revenue. However, unit costs (per token or per query) are falling fast thanks to advances in software and hardware.
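A quick back-of-the-envelope check with OpenAI’s 2024 figures shows what “more than one dollar per dollar of revenue” looks like. This is a sketch under a simplifying assumption: total spending is treated as revenue plus net loss, which ignores one-off and non-cash items. The helper name `spend_per_revenue_dollar` is hypothetical.

```python
# Rough "spend per dollar of revenue" from OpenAI's reported 2024 figures.
# Assumption: total spending ~= revenue + net loss (ignores one-off and non-cash items).
revenue_2024 = 3.7  # $ billions, as reported
loss_2024 = 5.0     # $ billions, "around $5 billion"


def spend_per_revenue_dollar(revenue, loss):
    """Dollars spent for every dollar of revenue, assuming spend = revenue + loss."""
    return (revenue + loss) / revenue


ratio = spend_per_revenue_dollar(revenue_2024, loss_2024)
print(f"~ ${ratio:.2f} spent per $1 of revenue")  # prints: ~ $2.35 spent per $1 of revenue
```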

Anthropic, the new enterprise AI darling, is in its own arms race. It pulled in $3.5 billion in March at a $62 billion valuation, and it is now reportedly negotiating another $3–5 billion round at almost triple that price tag. Its annualised revenue shot up from about $1 billion in December last year to $3 billion by July this year, and some trackers are betting it could hit $5 billion by year-end.
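To put that trajectory in perspective, the sketch below computes the implied compound monthly growth rate. It treats the quoted figures as exact and assumes smooth compounding, which real revenue never quite follows; `monthly_growth` is a hypothetical helper, and this is arithmetic, not a forecast.

```python
# Implied compound monthly growth of Anthropic's annualised revenue.
# Inputs: ~$1B (December) -> $3B (July) -> trackers' $5B estimate (year-end).


def monthly_growth(start, end, months):
    """Constant monthly rate r such that start * (1 + r) ** months == end."""
    return (end / start) ** (1 / months) - 1


past = monthly_growth(1.0, 3.0, 7)    # December -> July: 7 months
needed = monthly_growth(3.0, 5.0, 5)  # July -> December: 5 months
print(f"Dec-Jul implied growth: {past:.1%} per month")             # 17.0%
print(f"Jul-Dec growth needed for $5B: {needed:.1%} per month")    # 10.8%
```

On these assumptions, the $5 billion year-end estimate would imply growth slowing from roughly 17% to about 11% a month.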

However, neither OpenAI nor Anthropic is footing these bills alone. Both rely heavily on hyperscalers such as Microsoft, Amazon, and Google, who not only pour in investment cash but also offer cloud credits and discounted compute power. It’s a symbiotic deal: AI labs get to train massive models without going bankrupt, and cloud giants lock in years of juicy infrastructure revenue.

Big Tech, in response, is going all in to build AI data centres, networking, and custom silicon. Microsoft, Alphabet, Amazon, and Meta together plan well over $350 billion in AI-related spending in 2025 to meet soaring demand for training these models and running queries from users across the globe.

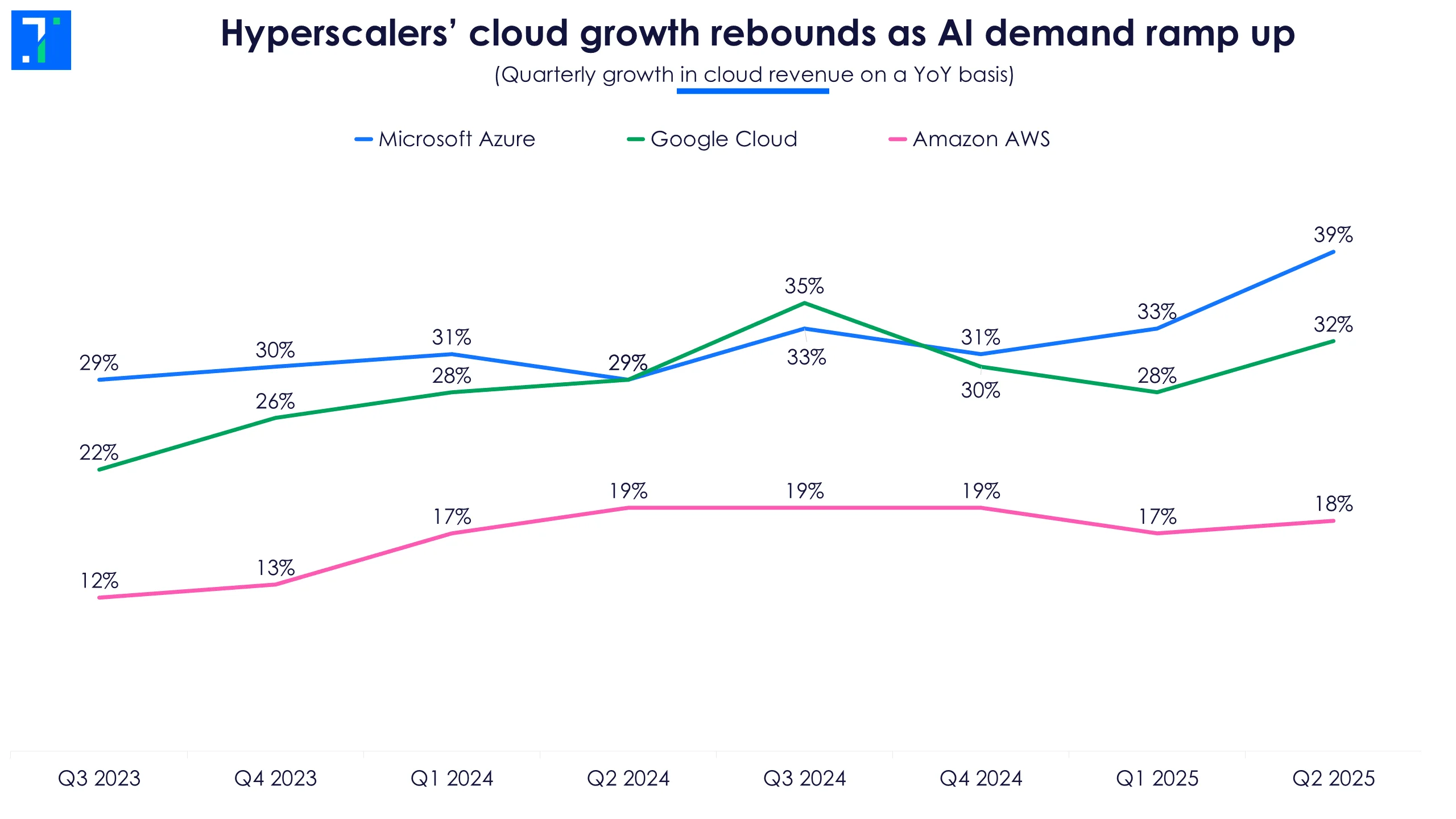

And this isn’t just a future bet. Demand from AI players is already lifting cloud revenues for tech giants, with Microsoft leading quarterly growth thanks to its deep partnership with OpenAI.

Hyperscalers’ cloud growth rebounds as AI demand ramps up

China plays catch-up

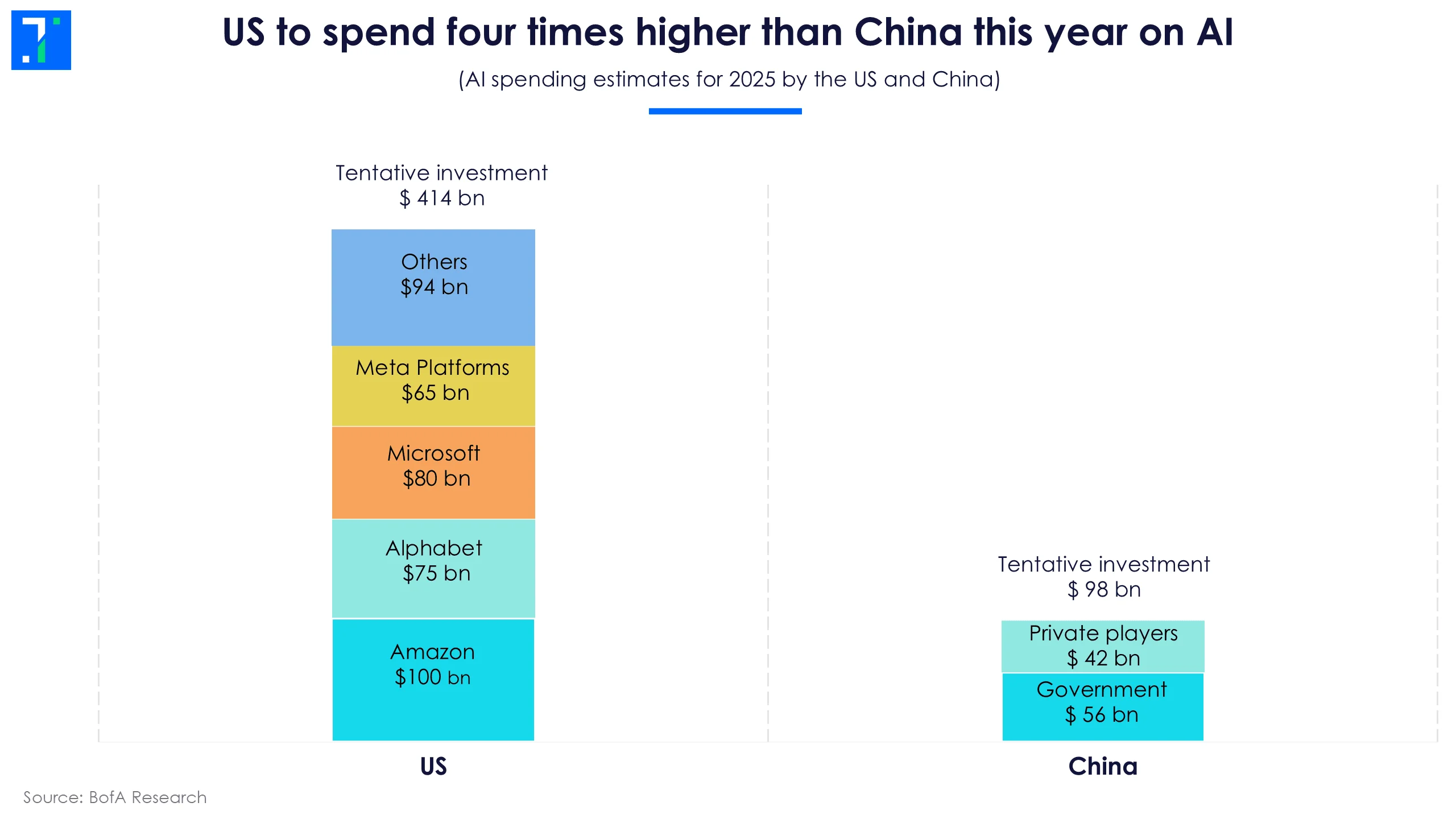

Unlike in the US, where private companies lead AI development, the Chinese government is driving much of the investment in the country’s AI infrastructure. Bank of America expects China’s AI spending to reach $98 billion in 2025, with $56 billion from the government and $42 billion from private players for data centres and energy needs. However, this comes to about 25% of what the US is expected to spend this year.

US to spend four times more than China this year on AI

China’s AI ambitions drew global attention in early 2025 with the launch of DeepSeek. With a reported training cost of just $5.6 million (a figure that covers only the final training run), the model stunned the industry by matching the performance of flagship systems from OpenAI and Anthropic, each developed at costs exceeding $100 million.

Jeffrey Towson, founder of TechMoat Consulting, said, “China and the US have pulled way out front in the AI race. China used to be one to two years behind the US. Now, it is likely only two to three months behind.”

In their latest move to close the gap with the US, Chinese firms plan to deploy over 115,000 Nvidia H100 and H200 GPUs across 39 massive data centres rising in the country’s western deserts. The goal: to train models on par with those from OpenAI. These chips, however, are among the advanced AI processors the US has banned from export to China—making the buildout both a technological milestone and a geopolitical flashpoint.

The US ban on exporting advanced AI chips is aimed at keeping its lead in the global AI race. The restrictions have cut China off from Nvidia’s most powerful processors, including the flagship B200 Blackwell chip. Recently, after the US demanded a 15% cut of the revenue from certain Nvidia and AMD chip sales to China, the Chinese government urged local players to use homegrown chips, citing national security.

The competition is on. For now, unless Chinese companies spring another surprise, the US is well in the lead in both models and market share.